Welcome! Machine Yearning is a semi-regular update on high- and low-profile developments in artificial intelligence, focusing on actionable tips and stories for executives, investors, and project managers.

I kicked off this project as an extension of a time-honored tradition: every Thanksgiving, a family member asks me, “What is AI? When are the machines coming to get us?”

AI as a concept can often seem intimidating to folks with non-technical backgrounds, and we tend to fear what we don’t understand. People usually fill in the gaps with pumped-up expectations and dystopian sci-fi imagery.

In my work leading applied machine learning projects at institutions big and small, I’ve run into many versions of the same Thanksgiving problem. Simply put, there aren’t enough people speaking the same language.

The goal of this newsletter is to bridge that gap by providing context, commentary, and deep dives for the 90% of institutions that have heard about AI but don’t yet know where to start. It sits at the intersection of AI, business, and product strategy. If this sounds like something you’re into, read on!

🔬 Long Take: Knowledge Gaps

Let’s get one thing out of the way: machine learning is a toolkit like any other. More important than knowing how to use a tool is knowing when not to use it. You don’t need an advanced degree to know when to use a hammer, and you don’t need to know how the hammer was designed, either. Just what it’s for.

These knowledge gaps manifest in a few different forms:

The expertise problem: When the quants are too far away from the customer to understand problems in context. In my work, the most common request I get from engineers and scientists is to hear the customer’s perspective more often.

The marketing problem: When client-facing personnel are not well-versed enough in technical concepts to concisely explain the value-add. This is one of the most common problems in fundraising.

The trickle-down problem: When an acquired AI startup fails to overcome the inertia of its parent company and stagnates, and its talent either gets bought out, returns to academia, or both. Ultimately, the acquisition is written off. More on this in a future issue.

These problems are so pervasive that, according to a joint BCG/MIT study, only around 10% of businesses have actually seen “any significant financial benefits” from applied AI. The report finds that the reasons for failure are nearly always preventable and non-technical: a lack of alignment, clear goal-setting, and leadership buy-in. The marketing problem is at the center of each of those failure points.

This doesn’t stop at the enterprise level. Sometimes the misunderstanding grows until it sweeps the entire industry as a runaway hype train, derailing otherwise competent portfolio companies that fail to live up to sky-high expectations.

A Tale of Two Curves

To illustrate this, I want to highlight a particularly common case where investors and practitioners disagree on what should be considered reasonable progress.

ARK’s “Big Ideas 2021”

ARK is an asset manager that structures its investment strategies around themes linked to “disruptive innovation.” They host a podcast I listen to, FYI - For Your Innovation, and recently released their “Big Ideas 2021” report, which highlights some of their favorite investment themes for the upcoming year.

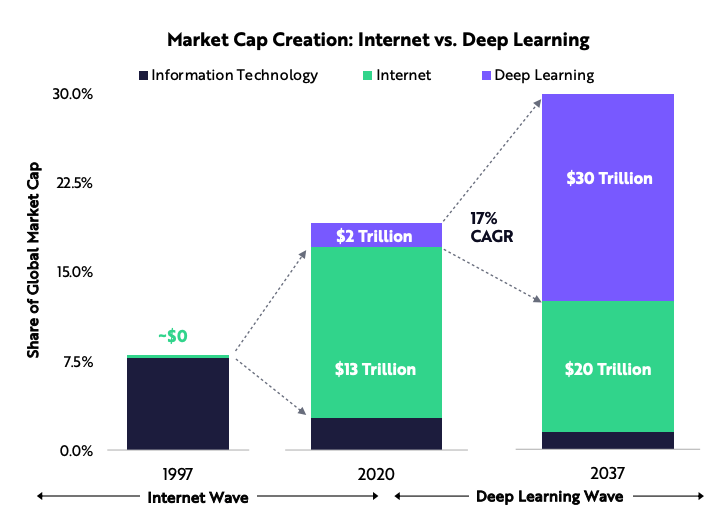

This year, they focus extensively on deep learning (the parfum du jour of machine learning methods) and share high expectations for its economic contribution in the coming years. In particular, they expect deep learning’s contribution to equity market capitalization to grow from an estimated $2 trillion (as of 2020) to a whopping $30 trillion over the next two decades.
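For a sense of what that projection implies, here is a quick back-of-the-envelope calculation of the compound annual growth rate needed to go from $2 trillion to $30 trillion. It assumes “two decades” means exactly 20 years. That is a simplification, and the report's own horizon may differ.

```python
def implied_cagr(start: float, end: float, years: float) -> float:
    """Compound annual growth rate that turns `start` into `end` over `years`."""
    return (end / start) ** (1.0 / years) - 1.0

# Figures from the paragraph above, in trillions of dollars.
# Assumption: "the next two decades" is read as exactly 20 years.
cagr = implied_cagr(start=2.0, end=30.0, years=20)
print(f"Implied growth: {cagr:.1%} per year, compounded for 20 years")
```

That works out to roughly 14.5% a year, every year, for twenty years: a steady exponential.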

This massive “deep learning wave” is contingent in part on the expected performance enhancements from deep learning applications. Like many others, ARK projects a familiar curve resembling Moore’s Law.

Projections like these are a good example of the marketing problem. Yes, some deep learning models are getting exponentially bigger, and if you were to take this to its logical conclusion, it’s only a matter of time before deep learning truly eats the world, with economically uncompetitive humans living off scraps. But is size on its own tantamount to performance? Not exactly.

Starsky Robotics’ Swan Song

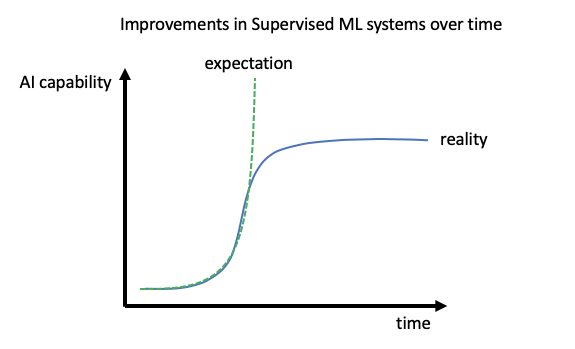

Contrary to the Moore’s Law expectation, practitioners assert that progress in machine learning applications isn’t exponential… it’s sigmoidal, an S-curve.
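A minimal sketch shows why the two curves are so easy to confuse. Below, a logistic (sigmoid) curve and an exponential curve share the same starting point and growth rate. The ceiling, rate, and midpoint are hypothetical values chosen only for illustration. They are not estimates for any real application.

```python
import math

# Hypothetical parameters, chosen only for illustration.
CEILING = 100.0   # level the S-curve saturates at (e.g. % of a performance target)
RATE = 0.5        # growth rate shared by both curves
MIDPOINT = 10.0   # time at which the S-curve is growing fastest

def logistic(t: float) -> float:
    """S-curve (sigmoid): rapid gains in the middle, flattening near CEILING."""
    return CEILING / (1.0 + math.exp(-RATE * (t - MIDPOINT)))

def exponential(t: float) -> float:
    """Exponential curve that starts where the S-curve starts and compounds forever."""
    return logistic(0.0) * math.exp(RATE * t)

if __name__ == "__main__":
    for t in range(0, 21, 4):
        print(f"t={t:2d}  exponential={exponential(t):9.1f}  s-curve={logistic(t):5.1f}")
```

Early on the two columns track each other closely, so a short stretch of data cannot tell you which curve you are on. Later the exponential keeps compounding far past 100, while the S-curve flattens out just below its ceiling.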

Last year, Starsky Robotics (one of the earliest self-driving truck startups) shut its doors for good. Despite a string of impressive milestones and firsts for the autonomous vehicle (AV) space, Starsky’s CEO Stefan Seltz-Axmacher points to unmet promises and overinflated expectations as the company’s kiss of death.

A common joke in the industry is that the first 90% of progress in a machine learning application is almost always pretty achievable… it’s the next 90% that’s so tough.

All models work well in small, controlled environments. But real-world deployments bring a plethora of edge cases you can’t always simulate, and depending on the required safety bar, you may not be able to resolve these cases in a window investors or executives are comfortable with.

The chart above captures this dilemma well. Its horizontal lines represent hypothetical human-equivalent performance levels. As Stefan puts it:

> If L1 is the line of human equivalence, then leading AV companies merely have to prove safety to be able to deploy… If L2 is the case, the bigger teams are somewhere from $1–25b away from solving this problem… If, however, L3 is the line of human equivalence it’s unlikely any of the current technology will make that jump.
>
> Whenever someone says autonomy is 10 years away that’s almost certainly what their thought is.

The investing community’s exponential pitch creates a misalignment with operators when the reality of the S-curve comes to light.

Starsky’s failure was not one of competence or technical ability. Safety (both real and perceived) is one of the biggest hurdles for AV, and Stefan’s team spent nearly two years doing nothing but safety engineering. Investors showed little enthusiasm for that path: realistic milestones and a business model that got the wheels turning, albeit at lower margins than traditional software.

As Stefan writes:

> It took me way too long to realize that VCs would rather a $1b business with a 90% margin than a $5b business with a 50% margin, even if capital requirements and growth were the same. And growth would be the same. The biggest limiter of autonomous deployments isn’t sales, it’s safety.
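To put numbers on that trade-off, here is a quick sketch of the gross-profit arithmetic. It assumes “a $1b business” means $1b in annual revenue, which is our reading rather than a stated definition. It also ignores valuation multiples entirely.

```python
def gross_profit(revenue_b: float, gross_margin: float) -> float:
    """Gross profit in billions of dollars: revenue times gross margin."""
    return revenue_b * gross_margin

# Assumption: "$1b business" / "$5b business" = annual revenue in billions.
high_margin = gross_profit(1.0, 0.90)  # $1b revenue at a 90% margin
low_margin = gross_profit(5.0, 0.50)   # $5b revenue at a 50% margin

print(f"90% margin business: ${high_margin:.1f}b gross profit")
print(f"50% margin business: ${low_margin:.1f}b gross profit")
print(f"Ratio: {low_margin / high_margin:.1f}x")
```

The lower-margin business produces nearly three times the gross profit. Even so, per Stefan, investors still preferred the higher-margin profile.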

I wholeheartedly recommend reading Stefan’s excellent blog post in full and keeping his thoughts in mind anytime you see exponential curves or hear the words “AI is taking over the world.” There is plenty of low-hanging fruit, but overinflated expectations and runaway hype trains recklessly divert capital and resources away from the most feasible, high-ROI projects toward the sexiest stories, which are easier to sell.

A Personal Note

Early in my career, I made a lot of the same mistakes mentioned above. Coming from a business background rather than an engineering one, I hyped the potential of some projects to executive audiences as soon as we found a clever way to apply machine learning to the problem. This places unfair expectations on your team and converts what might have been a few small wins into one big promise you might not live up to.

I’ve found the most success by appreciating the limitations of our solution, narrowing the scope, and laying out a stepped roadmap for how to eventually get to the Big Idea that executives and investors want. For the 90% of businesses that aren’t yet finding success with applied AI, that’s what I recommend: start small.

Bonus Content

These are articles, reports, videos, and more worth checking out that didn’t get a featured mention this issue.

📚 Reading

Introducing Reinforcement Learning for Optimizing the Player Lifecycle (Unity)

Don’t yet know what reinforcement learning (RL) is and too afraid to ask? Unity’s case study is a great primer on how the technology is used to maximize customer lifetime value (LTV) by keeping players engaged and their wallets open.

The Semiconductor Supply Chain: Assessing National Competitiveness (CSET)

Required reading for anyone without an industry background who wants to get familiar with the hardware powering machine learning applications (CTRL+F “AI ASICs”).

National AI Initiative Office Launched by White House (FedScoop)

The US formalizes a commitment to funding AI research of national interest and importance.

Why the OECD Wants to Calculate the AI Compute Needs of National Governments (VentureBeat)

“Compute” is a generalized term for computing capacity used to train and deploy machine learning models. “If we can measure how much compute exists within a country or set of countries, we can quantify one of the factors for the AI capacity of that country.”

China’s STI Operations: Monitoring Foreign Science and Technology Through Open Sources (CSET)

The US neglects open-source intelligence (OSINT) relative to China, which uses it as the “INT of first resort.” This contrast extends to science and technology intelligence (STI), used for assessing foreign capabilities in applied research and engineering.

The U.S. AI Workforce (CSET)

CSET defines a taxonomy for sizing the AI labor supply. Part 1 of a 3-part series.

This Chinese Lab is Aiming for Big AI Breakthroughs (WIRED)

Take a tour through one of the newest government-sponsored research labs, the Beijing Academy of Artificial Intelligence. Spoiler alert: they’re working on a GPT-3 / Switch Transformer competitor.

Amazon opens Alexa’s advanced AI for firms to build their own assistants (Business Standard)

Intelligent assistants in cars, apps, real estate, games, and edge devices galore.

🎧 Listening

Dan Wang on China’s Mission to Be a World Leader in Semiconductors (Odd Lots Podcast)

Dan Wang of Gavekal Dragonomics gives an on-the-ground perspective on China’s efforts to de-risk itself from the global semiconductor supply chain.

🎥 Watching

People’s Republic of Desire (Documentary)

At the intersection of technology and culture, “A digital fantasy world where culture has been abandoned in favor of commerce.” Timely for the Kuaishou IPO.

About me

I am an AI Product Manager at Spiketrap, a seed-stage language processing company where I focus on applied strategy, analytics, and new verticals for our NLP stack. Previously, I spent ~4 years at Uber integrating machine learning techniques into high-growth marketplaces like Uber Eats, Uber Elevate, and JUMP. I started my career in technology investment banking at Credit Suisse, specializing in fintech, blockchain, and Chinese internet companies.

I’m studying Artificial Intelligence part-time at Stanford, and graduated from Georgetown University with a BS in Finance and Economics.

Thanks for reading!

Any suggestions or items you want to see? Contact me on Twitter @rydcunningham or shoot me an email at rydcunningham@gmail.com.
